
The Perilous Gift of Life

The law and ethics of AI personhood

Advances in AI have led to ideas of affording machines the rights and obligations of a human person, but what we really should be talking about is our own responsibilities as developers and users of AI. In the fourth installment of our series on AI, we consider the legal and ethical debates surrounding AI personhood.

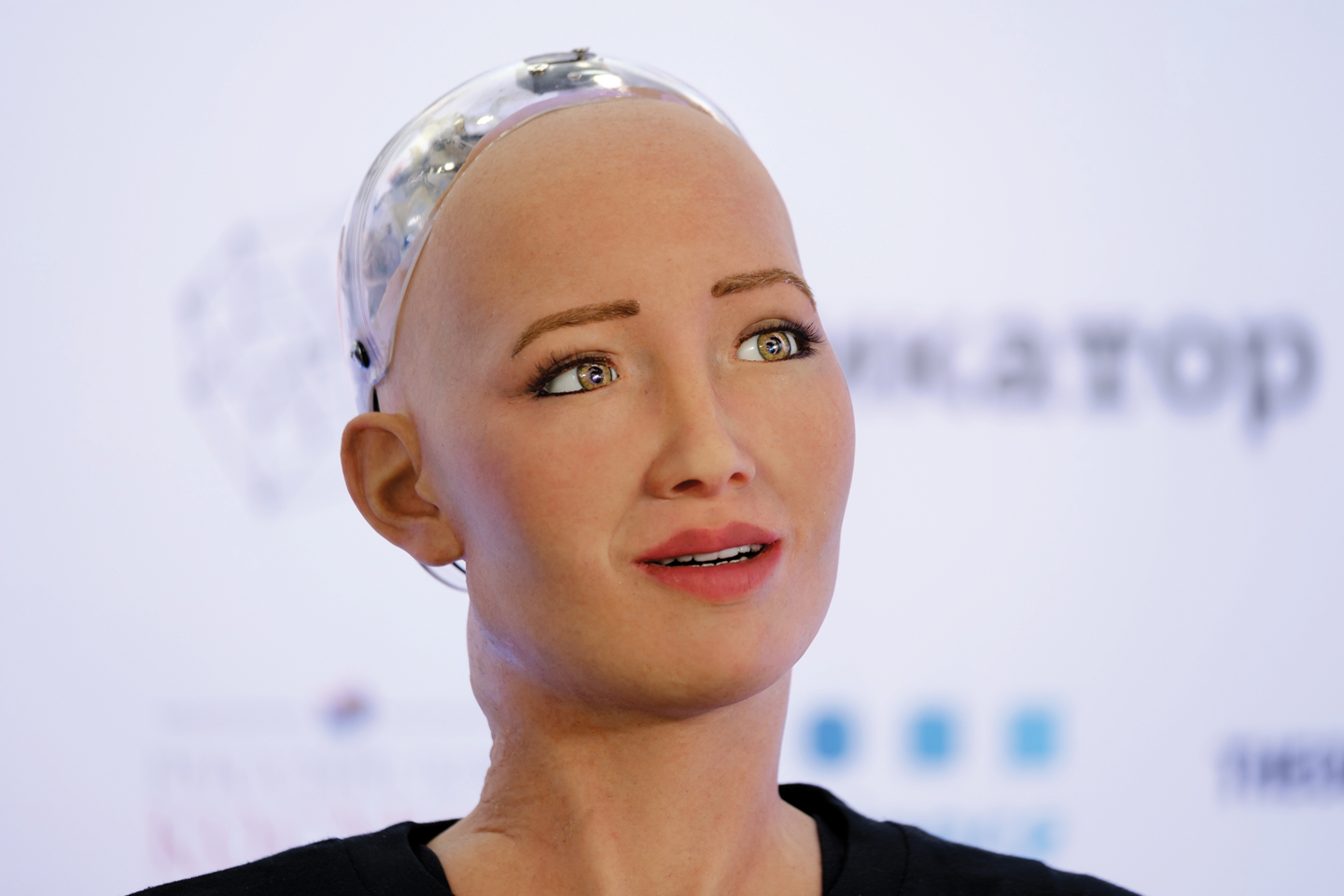

‘I’ve met Sophia. She’s amazing,’ said Prof. Eliza Mik, quickly realizing how she, too, could not avoid anthropomorphizing the robot, which was famously made a Saudi Arabian citizen in 2017. ‘But she’s just a piece of rubber.’

To learn, to grow, to catch up with us—such is the rhetoric we like to use to talk about machines. Indeed, it looks like they are becoming one of us with their constantly increasing capabilities to process the world and respond to it in ways that have real impact on our lives, thanks to AI. The reasonable next step, it seems, would be to subject them to the same ethics that govern us. But while it may be an interesting philosophical question, said the technology law specialist at the Faculty of Law, it is really a moot point on a pragmatic, legal level.

‘It’s completely misconceived.’

There is indeed room for debate as to whether a machine can be a person in ethical terms. Depending on the conditions one imposes on moral personhood, according to Prof. Alexandre Erler of the Department of Philosophy, it can be argued that a machine with AI is a moral agent.

‘There’s the more demanding notion that to be a moral agent, you need to have characteristics like having a mind and such mental states as desires, beliefs and intentions. The extreme would be adding things like consciousness to the list,’ said the scholar of the ethics of new technologies. If these are the conditions for being a moral agent, he continued, then no existing machine will qualify. However, there is a less demanding school of thought.

‘The idea is that as long as the machine has a certain flexibility within a pre-programmed framework and the choices it makes have moral significance, like a decision to save a life, it is a moral agent.’ One might argue whatever the machine does, it is still driven by the goal set by humans. However, it may also be argued that humans themselves are ultimately motivated by external factors like genetics in each of their actions. If this less stringent view is correct, then we are already seeing or could very soon be seeing machines with moral agency, examples being self-driving cars and autonomous weapons.

But even if a machine can be something of a moral agent on a theoretical level, it is another story in the real world. In 2017, the European Parliament contemplated a proposal to make AI machines legal persons, given that machines such as industrial robots have already caused human injuries and deaths. As Professor Mik pointed out, though, the proposal was plainly impractical.

‘Just use your common sense. Let’s say this machine that assembles cars is granted legal personhood. What next? It doesn’t have money, it doesn’t feel sorry if it kills a human, and you can’t put it in prison—it’s just a machine,’ said Professor Mik, noting how personhood goes hand in hand with liability. While non-humans like companies have been recognized as legal persons, it is always predicated on the fact that the entity can compensate for its actions, something machines are simply incapable of doing. And in any case, it is unnecessary to hold the machine accountable.

‘The robot has an owner. If the robot misbehaves and causes any form of damage, the owner can pay. And instead of granting personhood to the robot, just ask the owner to take extra insurance. Problem solved,’ said Professor Mik.

It is similarly counterproductive to grant machines rights. In the previous installment, we took a look at the Luxembourg-developed virtual composer AIVA, which has been given copyrights. In theory, it may be justified to make a creative AI program a copyright holder, provided the developers and all the humans needed to fine-tune its work are also duly credited, as Professor Mik reminded us. Although its output would inevitably be an amalgamation of pre-existing human works, as we have seen, the same can be said of our own artistic productions. As long as its work departs considerably from its predecessors by the standard by which we judge a human work, it could be copyrighted. But again, it is unhelpful in practice to give machines copyrights, or any rights for that matter, when it will ultimately have to be the human user or developer that receives the actual benefits.

‘In a nutshell, there’s no advantage in recognizing AI as a legal person,’ Professor Mik said. ‘Sophia’s just a really nice publicity stunt after all.’

While seeing how machines may qualify as moral agents in theory, Professor Erler also stressed the practical problem that, at the current level of AI, machines are unable to pay for their actions. But perhaps the real danger of all the talk of giving machines personhood is how it obscures the fact that however free they might be, as both Professor Erler and Professor Mik noted, machines are confined within a larger modus operandi, determined by none other than humans. Whether we are dictating to them what principles to follow or, in the case of AI, letting them figure these out by observing how we deal with certain situations, humans are at the heart of every action they take. And above all, it is always us who initiate a task and set their goals. Rather than trying to elevate their status and tone down our role, we should focus on how we can use them more responsibly, starting by knowing when we must answer for what a machine causes.

‘It’s possible for us to feel less responsible as we delegate more and more tasks to machines. Whether it’s justified to feel that way will depend on the state of the technology,’ said Professor Erler, using the 2009 crash of Air France Flight 447 as an example. One explanation of the tragedy is that the aircraft’s automated system stopped working, which goes to show that as much as the operation of the modern aircraft has been automated, the system can still fail. Where it cannot be reasonably believed that the machine works all the time as in this case, the human should stay vigilant and be ready to take over. In the crash, however, the pilots got confused, being unprepared for the system’s failure. This is one of those situations where the human user must be held accountable for what the machine does.

‘If the machines keep getting better and they become way more reliable than we are at certain tasks, then the users might be justified in not feeling responsible for the machines’ judgements. It would actually be more reasonable not to step in,’ said Professor Erler.

But for now, we will have to take care not to put all our trust in them, not least because many AI systems are black boxes, as we have seen earlier in our series. The fact that we do not always know the rationale behind a machine’s decisions leads to an important ethical question: is it at all responsible to use something we do not fully understand? Professor Erler said it may be justified if the machine consistently delivers good results and the outcome is the only thing that matters, as in a game of chess, where all we care about is probably having a worthy opponent. In cases where procedural justice is crucial, though, such as using AI to predict recidivism, we will have to do better than blindly following the machine’s advice.

‘What you’d ideally expect is a list of reasons from the machine. That doesn’t mean you have to know all the technical details behind it, and you might not be able to. As long as it gives you a justification, you can go on to evaluate it and decide if it’s any good,’ said Professor Erler, hinting again at humans’ irreplaceable role in the age of AI.

Another weakness of AI we must bear in mind is the bias it inherits from the data we use to train it, as discussed previously. We have seen that a machine feeding on incomplete knowledge can lead to real, lived societal harm aside from producing uninspired art. Worst of all, the bias may go unnoticed, with machines exuding a veneer of neutrality. Speaking of the ethics of autonomous vehicles, Professor Erler brought up a particularly chilling example of such harm.

‘There’ve been surveys on how the moral principles guiding a self-driving car may differ around the world. Some societies seem to think that people of higher social status are more important morally and deserve more protection. Do we want our cars to act upon these sorts of beliefs?’

For as long as machines continue to work under our influence, we will need to address their bias as responsible developers and users. A big and diverse data set for them to feed on will of course be imperative, but people other than those involved in building and training them—ordinary citizens like ourselves—also have a role.

‘One thing we could do is to report instances of bias when we think we’ve encountered them, and that can contribute to a conversation,’ said Professor Erler, citing the automated recruiting system that carried forward the gender disparity at Amazon and rejected applications from women. ‘In some cases, there may be no bias after all and some groups do fit better in certain areas, but in others the bias is real. If there’s a discussion and an awareness of the problem, there will be an incentive for it to be rectified like in Amazon’s case, where they stopped using the system after becoming aware of the issue.’

‘I love science fiction,’ said Professor Mik, who is certainly not unfamiliar with the trope of robots becoming human and superhuman in all the films she has watched and rewatched. But when asked about the prospect of sentient machines being created in reality and how that might change our view on AI personhood, she was quick to brush the idea off.

‘When we’re that far, we’re going to have bigger problems than AI personhood. We’ll probably not be around. In any case, you’ll know what level of technological progress we’ve reached when you actually read the literature written by those involved in developing the technology.’ And as other AI researchers have pointed out, there is no reason why we would want to invest in making sentient machines when the very purpose of having machines in the first place is to make them serve us as we please.

‘What does it give you to create a robot that feels? It only gives you trouble. You can’t talk back to your Alexa anymore,’ said Professor Mik.

Professor Erler agreed it is a remote prospect, but he suggested that some of these more speculative scenarios might be worth thinking about on a philosophical level, as his former colleague at Oxford Dr. Toby Ord does in his book The Precipice.

‘The argument is that if we wait until AI reaches that level of development, it would probably be too late. We would no longer be able to place constraints on its design and prevent catastrophes.’

It is always interesting to get ahead of ourselves. In fact, it is important that we do: how else can we know anything beyond this narrow slice of existence we call the present? But the present has its own problems, and pressing ones at that. When it comes to our current day-to-day negotiations with AI, there is a broader truth to what Professor Mik said as a fan of sci-fi:

‘Keep sci-fi away from law.’

By jasonyuen@cuhkcontents

Photos by ponyleung@cuhkimages and amytam@cuhkimages

Illustration by amytam@cuhkimages