An Eagle Eye for Smart Diagnoses

A record-high number of physicians left Hong Kong’s public hospitals last year with a turnover rate of 5.9%. Radiology and pathology are two of the worst-hit departments. With medical images playing an increasingly critical role in diagnoses (IBM estimated that medical images account for 90% of all medical data), the workload of those responsible for the interpretation of the images will only get heavier. To relieve their workload and to enhance diagnostic accuracy, the research team led by Prof. Heng Pheng-Ann has developed an artificial intelligence (AI) platform for automated medical image analysis.

Deep learning, a subset of AI, uses algorithms such as convolutional neural networks (CNNs) to identify patterns in image data. ‘It mimics how our brains receive visual stimuli from the eyes to construct meaningful output. The AI platform will analyse and interpret the data collected, following the instructions of the clinicians or the platform engineers,’ explains Professor Heng of CUHK’s Department of Computer Science and Engineering. ‘It’s like the Go-playing programme AlphaGo which develops winning strategies itself, having been exposed to enough data for algorithm training.’

When the automated screening and analysis system is adopted by the medical sector, it may act as the doctor’s tireless assistant to efficiently and accurately identify the source of an illness, enabling timely and appropriate treatment. The AI platform developed by Professor Heng’s team has been validated on lung cancer and breast cancer, two of Hong Kong’s most prevalent cancers, achieving accuracies of 91% and 99%, respectively. ‘An increasing number of new cancer cases have been diagnosed in recent years. A process that used to take 15 minutes or more can now be dramatically shortened for timely and accurate treatment, which could be life-changing for many,’ says Professor Heng.

Physicians’ Unerring Assistant

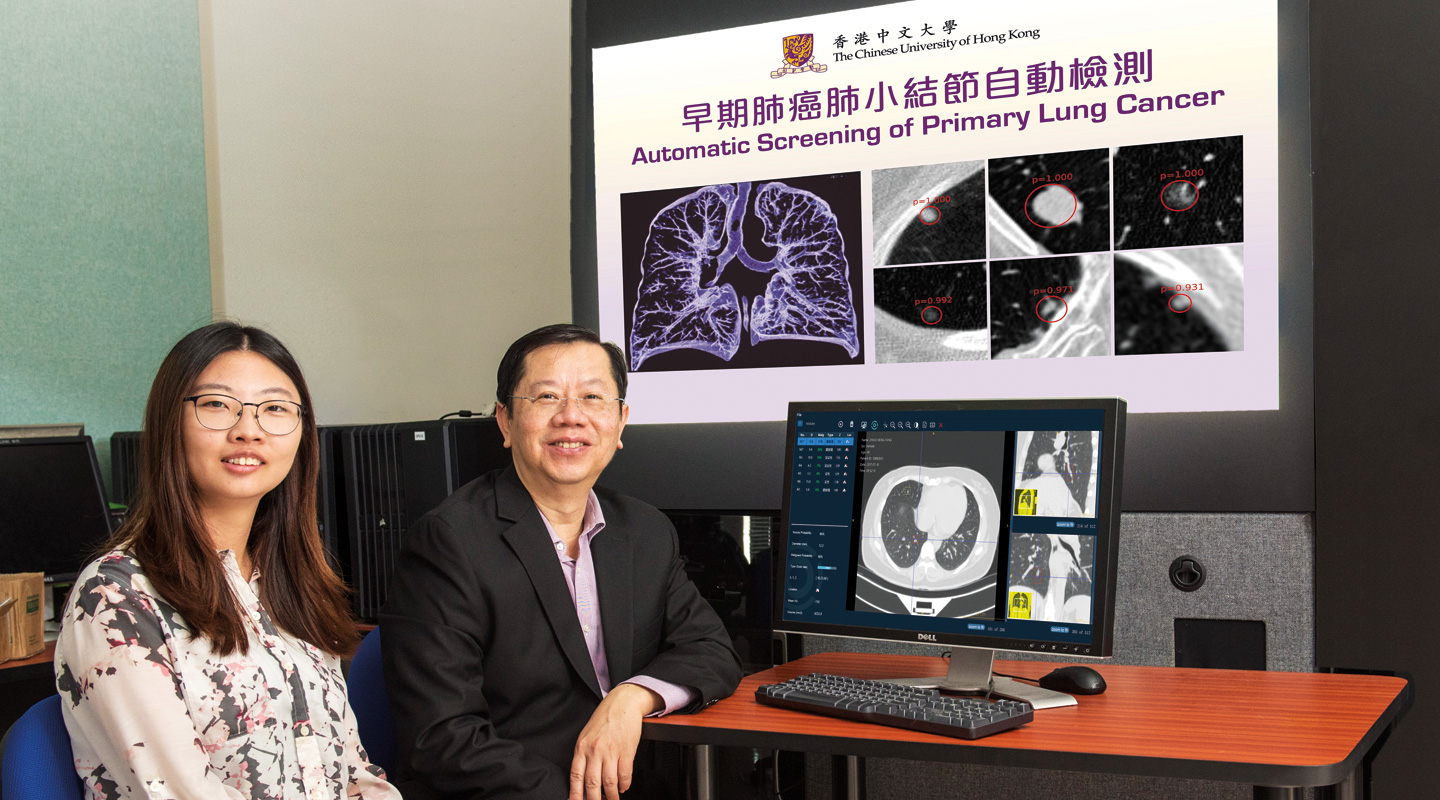

Early-stage lung cancer exists in the form of pulmonary nodules, which appear as small shadows on CT slices. Going through each CT slice with the naked eye takes about five minutes, and the accuracy depends on the radiologist’s experience and attentiveness. The team designed a 3D CNN that incorporates the structural characteristics of thoracic CT slices and is able to locate any suspicious nodule in 30 seconds. ‘3D CNN overcomes the challenges of analysing volumetric medical images such as CT and MRI images. Our team is the pioneer in 3D CNN and the results have been recognized in some international challenges,’ says Dou Qi, a PhD student of Professor Heng.
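To make the idea of a 3D convolution concrete, here is a minimal sketch in plain Python. It is not the team's network: the toy volume, the averaging kernel and the names `conv3d_valid` and `make_toy_volume` are all hypothetical, chosen only to show how a 3D filter reads across neighbouring slices instead of treating each slice on its own.

```python
from typing import List

Volume = List[List[List[float]]]  # indexed as [slice][row][column]


def conv3d_valid(volume: Volume, kernel: Volume) -> Volume:
    """Slide a 3D kernel over a 3D volume (stride 1, no padding).

    As in most deep learning libraries, the kernel is not flipped,
    so strictly speaking this is a cross-correlation.
    """
    d, h, w = len(volume), len(volume[0]), len(volume[0][0])
    kd, kh, kw = len(kernel), len(kernel[0]), len(kernel[0][0])
    out: Volume = []
    for z in range(d - kd + 1):
        plane = []
        for y in range(h - kh + 1):
            row = []
            for x in range(w - kw + 1):
                total = 0.0
                # Sum over the kernel's depth as well as its height and width,
                # so each output value mixes information from several slices.
                for dz in range(kd):
                    for dy in range(kh):
                        for dx in range(kw):
                            total += volume[z + dz][y + dy][x + dx] * kernel[dz][dy][dx]
                row.append(total)
            plane.append(row)
        out.append(plane)
    return out


def make_toy_volume(size: int = 5) -> Volume:
    """Hypothetical toy 'scan': zeros with a bright 3x3x3 blob in the centre."""
    vol = [[[0.0] * size for _ in range(size)] for _ in range(size)]
    for z in range(1, 4):
        for y in range(1, 4):
            for x in range(1, 4):
                vol[z][y][x] = 1.0
    return vol


# Averaging kernel: responds most strongly where a whole 3x3x3 block is bright.
blob_kernel = [[[1.0 / 27] * 3 for _ in range(3)] for _ in range(3)]
response = conv3d_valid(make_toy_volume(), blob_kernel)
```

In this toy case the response peaks at the centre of the output, where the kernel fully overlaps the blob, and falls off towards the corners. A trained 3D CNN stacks many learned kernels of this kind rather than a single fixed one.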

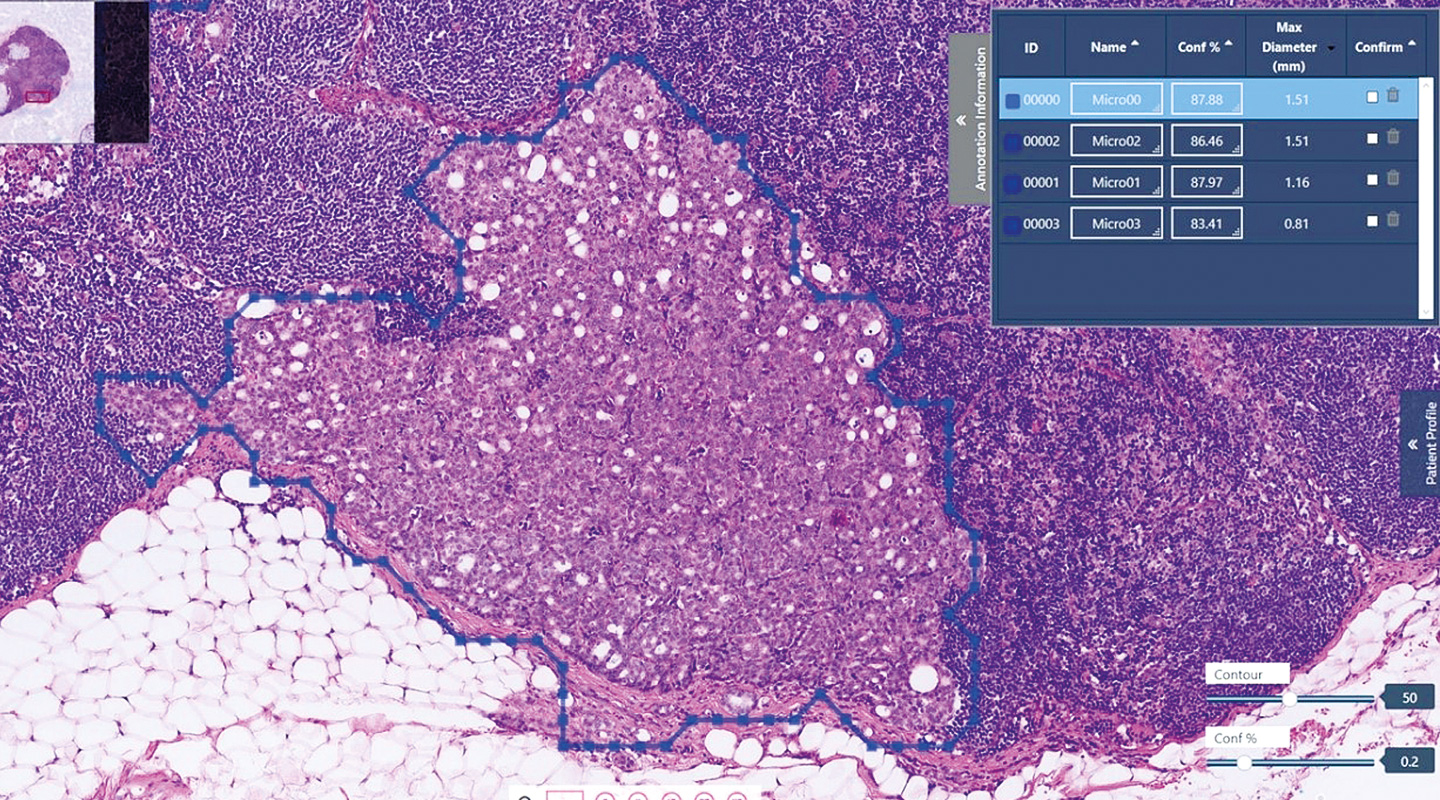

The only accurate way to diagnose breast cancer, the most prevalent cancer among local women, is to perform a biopsy to closely examine the cells collected. However, a digital histology image is often up to one GB in file size, equivalent to a 90-minute high-resolution movie. Examining such an image requires a reliable system and is both time- and energy-consuming. The CNN model developed by the team enables automatic detection of cancer cells in five to 10 minutes, compared with the 15 to 30 minutes needed with the naked eye. The system is 60 times faster than the state-of-the-art method and outperforms an experienced pathologist by 2%.

Identifying false positives among candidate pulmonary nodules is one of the biggest challenges, as many of them look similar to genuine nodules. ‘We therefore constructed an online sample filtering scheme to select the suspected samples to train the AI platform and enhance its screening capability. We also applied a deep learning model to annotate and locate the exact pulmonary nodules,’ Professor Heng elaborates. Their paper presenting the deep learning technique won the Medical Image Analysis Best Paper Award at the International Conference on Medical Image Computing and Computer Assisted Intervention 2017.
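The article does not spell out how the online sample filtering scheme works. One common way to build such a filter, assumed here purely for illustration, is to rank the samples in each training batch by their current loss and keep only the hardest ones, so that confusing look-alikes get more attention. The function names and the toy batch below are hypothetical.

```python
import math
from typing import List


def binary_cross_entropy(prob: float, label: int, eps: float = 1e-7) -> float:
    """Loss for one sample; prob is the predicted probability of 'nodule'."""
    p = min(max(prob, eps), 1.0 - eps)  # clamp to avoid log(0)
    return -math.log(p) if label == 1 else -math.log(1.0 - p)


def select_hard_samples(probs: List[float], labels: List[int], keep: int) -> List[int]:
    """Return the indices of the `keep` samples with the highest loss."""
    losses = [binary_cross_entropy(p, y) for p, y in zip(probs, labels)]
    ranked = sorted(range(len(losses)), key=lambda i: losses[i], reverse=True)
    return ranked[:keep]


# Hypothetical mini-batch: predicted nodule probability and true label (1 = nodule).
probs = [0.95, 0.10, 0.80, 0.40, 0.05]
labels = [1, 0, 0, 1, 0]

# Sample 2 is a confident false positive and sample 3 a missed nodule,
# so these two carry the highest loss and are kept for the next update.
hard = select_hard_samples(probs, labels, keep=2)
```

In a real training loop the selection would be repeated for every batch, using the model's latest predictions, which is what makes the filtering "online".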

The team started the medical image analysis project in 2013, with samples collected from over 5,000 patients of various nationalities. ‘We pay due respect to the patients’ privacy. Their names and personal information had been removed before the data were analysed,’ Dou Qi says. With positive feedback from the medical sector, Professor Heng expects the deep learning system to be widely adopted in the next couple of years. ‘We will work on more prevalent cancers such as cervical cancer and nasopharyngeal carcinoma (NPC). We’re working, for instance, with Sun Yat-sen University Cancer Center on AI-assisted delineation of the targeted area for NPC radiotherapy. The system can finish the delineation in less than a minute.’

University–Industry Collaboration

The AI technology translates into a much-needed boost to quality health care. As of February 2017, there were 106 AI startups in health care globally, including ImSight Medical Technology. The startup was incubated by CUHK’s Virtual Reality, Visualization and Imaging Research Centre, headed by Professor Heng. He is the co-founder and principal scientist of the startup.

ImSight Medical Technology’s work on developing medical image analysis software and scalable platforms for automated processing of medical images has drawn investors’ attention. Shenzhen Capital Group led an RMB60 million Series A investment in ImSight Medical in late March this year. During its initial period of operation in 2017, the startup received funding from the Hong Kong Innovation and Technology Commission and an RMB20 million investment from Lenovo Capital and Incubator Group. China’s medical imaging market is growing at 30% per year, but the number of trained radiologists is growing at only 4.1% per year. Commercializing the technology can narrow the gap between supply and demand.
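A rough back-of-the-envelope sketch shows how quickly that gap compounds. It assumes, for illustration only, that both growth rates stay constant and that imaging demand scales with market size; neither assumption comes from the article, and the function name is hypothetical.

```python
def demand_per_radiologist(
    years: int,
    market_growth: float = 0.30,        # 30% per year, as quoted above
    radiologist_growth: float = 0.041,  # 4.1% per year, as quoted above
) -> float:
    """Relative imaging demand per radiologist after `years`, with today = 1.0."""
    return ((1.0 + market_growth) / (1.0 + radiologist_growth)) ** years


# Under these assumptions, demand per radiologist roughly triples in five years.
ratio_after_5_years = demand_per_radiologist(5)
```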

In February 2018, ImSight Medical Technology entered into a partnership with CUHK’s Shenzhen Research Institute for research and development. ‘The University can focus on research development and grooming technology experts, while the company can build strategic collaborations with industry partners and develop more products,’ Professor Heng says. ‘Big data is transforming medicine. We’re in the new era seeing how the medical diagnoses are optimized by data-driven insights. Together with the healthcare professionals, we’re producing the best outcomes for patients.’

J. Lau

This article was originally published in No. 516, Newsletter in Apr 2018.